It was a summer day in 2024. It has already been more than a year, but I never had a chance to share this experience. Better late than never: now that I have a personal space to write in, it feels like a good time to share it.

KCCV 2024

In Korea, there is an annual conference called the Korean Conference on Computer Vision (KCCV), where we share papers by Korean institutes or authors that were accepted to prestigious computer vision conferences.

It’s actually a good chance for Korean researchers to network and mingle, so professors often recommend attending it. For KCCV 2024 in particular, the program chair was Minsu Cho, one of the head professors of my lab, so my labmates and I all had to go to Busan and help him out as staff members.

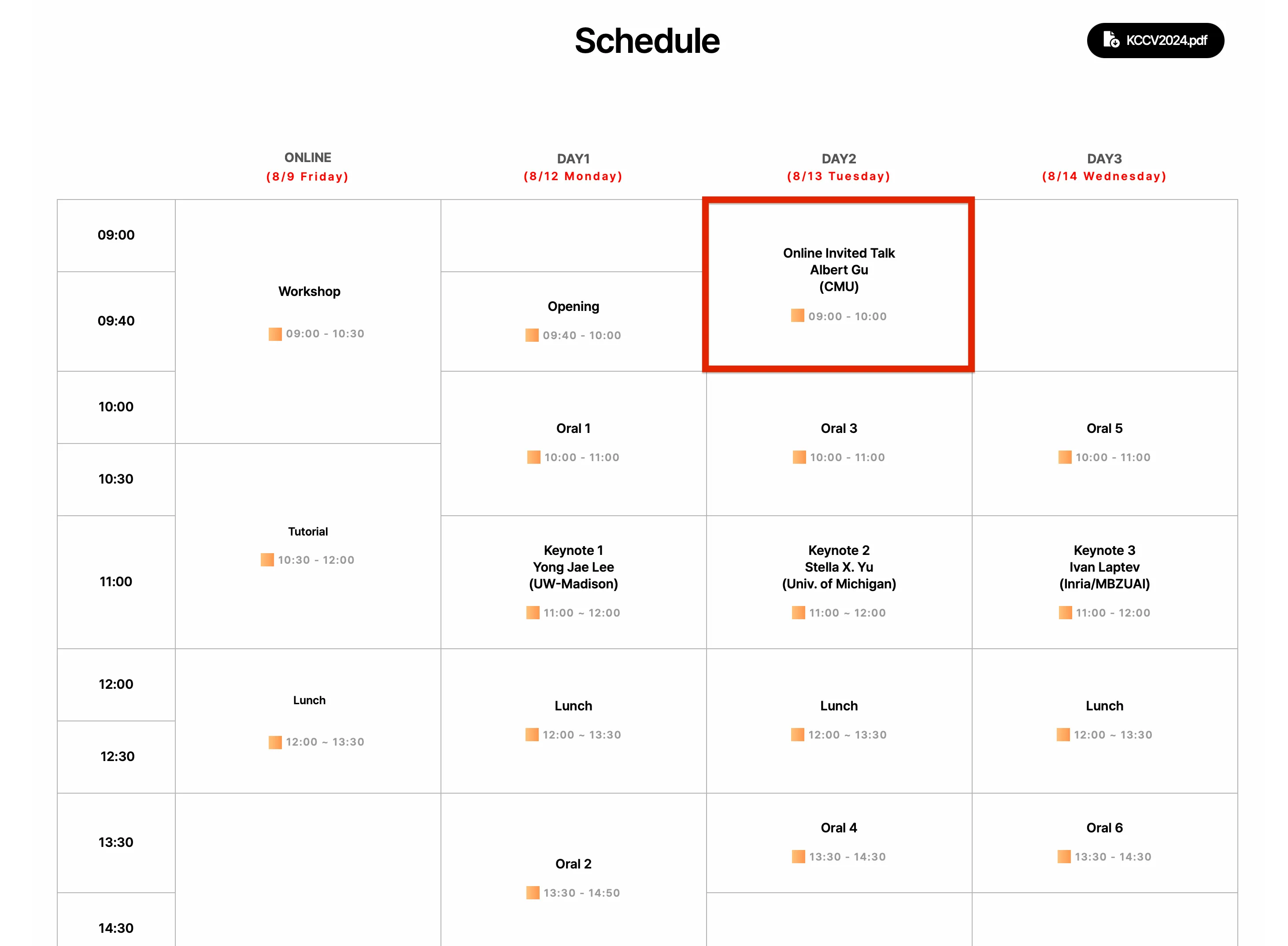

Since I was on staff, I had little chance to attend the sessions the way regular attendees did. Nevertheless, when I saw the schedule I was surprised: someone I never expected to appear would be part of the event and would be giving a talk for us!

Who is Albert Gu?

Some of my labmates were not as surprised as I was, which is somewhat understandable given what Professor Gu is known for. For readers who feel the same way, I’ll briefly go over who he is and why his coming to the conference got me excited.

Albert Gu is a professor at Carnegie Mellon University, and is known as an author of the renowned Mamba paper, which I introduced in one of my previous posts. I have huge respect for him, not so much because he wrote a famous paper, but because the way he shaped his PhD research path was very cool.

Specifically, he did not fill his publication list by mixing trendy techniques and architectures. Instead, he carved out his own field, pursued his idea of adopting state-space models for sequence modeling, and eventually came up with an innovation that had a large impact on the field. This should be every researcher’s dream, right? I was very impressed by his research path, and have been quietly following his research through his blog. I hope he finds this blog one day too haha.

Anyway, I had no clue how he ended up appearing at KCCV, a very local event. Maybe it is because one of the chairs, Seon Joo Kim, knows him somehow, since his former student Sukjun Hwang is currently a member of Albert Gu’s group? Either way, it was a great chance to see him speak live and ask questions.

What I asked & the answer he gave

The title of his talk was “Structured State Space Models for Deep Sequence Modeling”.

I was wondering how he would deliver his math-heavy ideas to the general audience of KCCV, and in fact he did not go deep enough for that to be a worry. If I remember right, the talk was similar to a relatively recent post on his blog, “On the Tradeoffs of SSMs and Transformers”.

I think the content of the talk was very appropriate, in that it discussed higher-level ideas that many people would find easier to understand. Yet it was not really enough to resolve the questions that had popped up in my mind while I was studying his work.

By the way, in Korea, people tend to be afraid to speak up and ask questions during lectures.

I took advantage of that and asked a question. The gist of my question was:

We all know that transformers tend to model token-level relationships of the input. I thought Albert could provide a similarly intuitive explanation for SSMs too.

Although it’s been more than a year, I still remember how his answer began.

I DON’T KNOW.

Of course the answer was longer, but that was exactly how it started.

I think I should’ve recorded the whole answer. I do not remember exactly what came after, but he definitely said that the matrix no longer has the explicit meaning it had in earlier state space models (such as HiPPO), as it has become more implicit and harder to interpret these days.
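For readers less familiar with SSMs, here is a minimal sketch of the kind of recurrence being discussed, assuming "the matrix" refers to the state matrix usually written A. The function name `ssm_scan` and the toy parameter values are hypothetical and hand-picked for illustration; this is a plain discrete linear SSM, not the actual Mamba or HiPPO parameterization.

```python
def ssm_scan(A, B, C, inputs):
    """Run a discrete linear state space model over a 1-D input sequence.

    h_t = A h_{t-1} + B u_t   (state update)
    y_t = C h_t               (readout)
    """
    n = len(B)
    h = [0.0] * n  # hidden state starts at zero
    outputs = []
    for u in inputs:
        # The state matrix A decides how the previous state is carried forward.
        h = [sum(A[i][j] * h[j] for j in range(n)) + B[i] * u for i in range(n)]
        outputs.append(sum(C[i] * h[i] for i in range(n)))
    return outputs


# Toy 2-state example (assumed values, not learned parameters).
A = [[0.5, 0.0], [0.0, 0.9]]  # each state decays at its own rate
B = [1.0, 1.0]
C = [1.0, 1.0]
ys = ssm_scan(A, B, C, [1.0, 0.0, 0.0])  # response to a single impulse
```

In this toy case A is easy to read: it only sets how fast each state forgets the impulse. Once A is learned from data, and especially when it depends on the input, that kind of direct reading is no longer available, which is roughly the point I took away from his answer.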

Next post tease

Well, if we do not know how and what an SSM learns, it will be hard for us to find appropriate cases in which to reimplement SSM parameters.

Recently, I came up with a new way to interpret state-space models, and I think it makes sense in many ways. It goes some way toward answering my own question and explains what the matrix means from a higher-level perspective. I also figured out that my interpretation introduces a new way to define multi-dimensional SSMs, which is something many people in this field have been craving.

I will be back with the post explaining my idea! Thanks for reading.
