Accepted to ICLR 2026. 🎉

Check out the OpenReview page if you are interested in how it went!

Read PDF here.

What is a data-specific neural representation?

Overfitting a network to represent exactly one datum can itself serve as data compression. For instance, a well-known branch of this line of work, INR (implicit neural representation), overfits a coordinate-based function to an image.

If the function’s parameters can be expressed with fewer bits than the actual image, you can store the parameters instead of the image itself. There are a few other branches of work (e.g., neural compression) that try to represent a datum with a neural network, which led us to come up with the term “data-specific neural representation”.
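To make the idea concrete, here is a minimal sketch of overfitting a coordinate-based function to one image and comparing storage costs. It is only an illustration, not the method from the paper: the synthetic 16×16 image, the tiny tanh MLP, the helper names (`make_image`, `forward`, `fit_inr`), and the storage assumptions (float32 parameters, 8-bit grayscale pixels) are all made up for this example.

```python
import numpy as np

def make_image(size=16):
    """Synthetic grayscale image in [0, 1] plus the (x, y) coordinate of every pixel."""
    xs = np.linspace(-1.0, 1.0, size)
    gx, gy = np.meshgrid(xs, xs)
    image = 0.5 + 0.5 * np.sin(np.pi * gx) * np.cos(np.pi * gy)
    coords = np.stack([gx.ravel(), gy.ravel()], axis=1)  # shape (size*size, 2)
    return image, coords

def forward(params, coords):
    """Coordinate-based function: (x, y) -> pixel value, via one tanh hidden layer."""
    w1, b1, w2, b2 = params
    hidden = np.tanh(coords @ w1 + b1)
    return hidden, hidden @ w2 + b2

def fit_inr(image, coords, hidden=8, steps=2000, lr=0.05, seed=0):
    """Overfit the MLP to a single image with full-batch gradient descent on MSE."""
    rng = np.random.default_rng(seed)
    params = [
        rng.normal(size=(2, hidden)),
        np.zeros(hidden),
        rng.normal(size=(hidden, 1)) / np.sqrt(hidden),
        np.zeros(1),
    ]
    target = image.reshape(-1, 1)
    n = target.shape[0]
    history = []  # MSE measured before each update
    for _ in range(steps):
        w1, b1, w2, b2 = params
        h, pred = forward(params, coords)
        err = pred - target
        history.append(float(np.mean(err ** 2)))
        # Manual backpropagation of the mean squared error.
        d_pred = 2.0 * err / n
        d_z = (d_pred @ w2.T) * (1.0 - h ** 2)
        grads = [coords.T @ d_z, d_z.sum(axis=0), h.T @ d_pred, d_pred.sum(axis=0)]
        params = [p - lr * g for p, g in zip(params, grads)]
    return params, history

image, coords = make_image()
params, history = fit_inr(image, coords)

n_params = sum(p.size for p in params)
param_bits = 32 * n_params   # assumption: parameters stored as float32
image_bits = 8 * image.size  # assumption: raw image stored as 8-bit grayscale

print(f"MSE before training: {history[0]:.4f}, at last step: {history[-1]:.4f}")
print(f"parameters: {n_params} ({param_bits} bits) vs. raw image: {image_bits} bits")
```

Storing `params` in place of `image` only counts as compression when `param_bits` is smaller than `image_bits`, and it is lossy: the reconstruction from `forward(params, coords)` is an approximation whose quality depends on how well the small network fits the signal.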

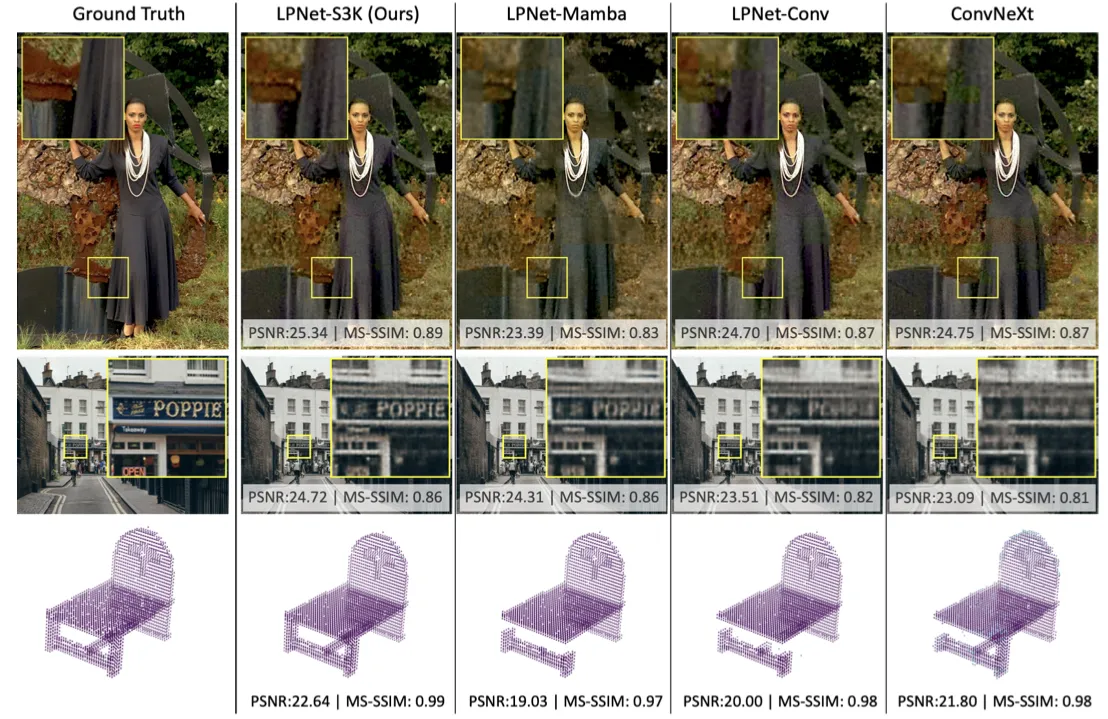

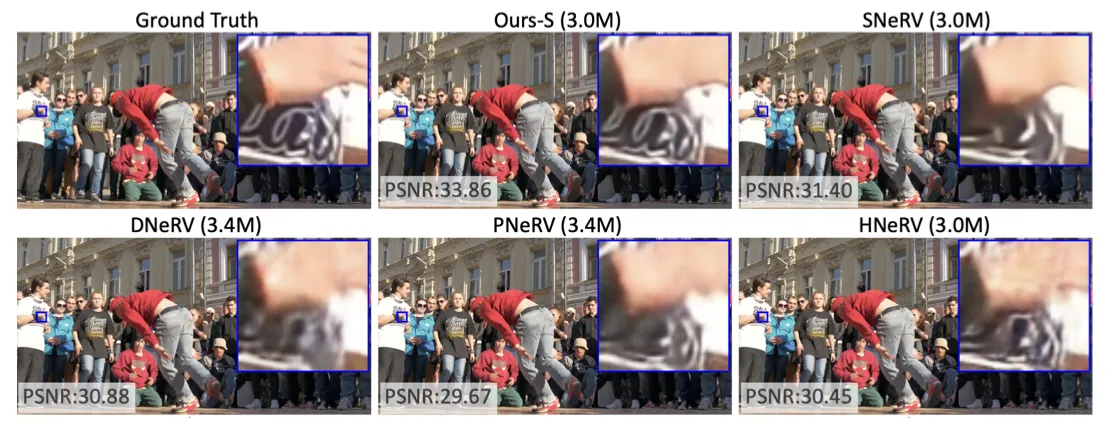

In this paper, we use the compressive property of state-space models to achieve better compression:

under the same compression ratio, it achieves better reconstruction quality, or

with fewer parameters, it achieves equal or better performance compared to other methods.

Abstract

This paper studies the problem of data-specific neural representations, aiming for compact, flexible, and modality-agnostic storage of individual visual data using neural networks. Our approach considers a visual datum as a set of discrete observations of an underlying continuous signal, thus requiring models capable of capturing the inherent structure of the signal. For this purpose, we investigate state-space models (SSMs), which are well-suited for modeling latent signal dynamics. We first explore the appealing properties of SSMs for data-specific neural representation and then present a novel framework that integrates SSMs into the representation pipeline. The proposed framework achieved compact representations and strong reconstruction performance across a range of visual data formats, suggesting the potential of SSMs for data-specific neural representations.
